The artificial intelligence tools used to undress people without consent were created to target women. These nude deepfakes do not work on men.

The manipulation of images and videos to create sexually oriented content is closer to being considered a criminal offence in all European Union countries.

The first directive on violence against women will go through its final approval stage in April 2024.

Through artificial intelligence programmes, images are being manipulated to undress women without their consent.

But what will change with this new directive? And what happens if women living in the European Union are the victims of manipulation carried out in countries outside the European Union?

The victims

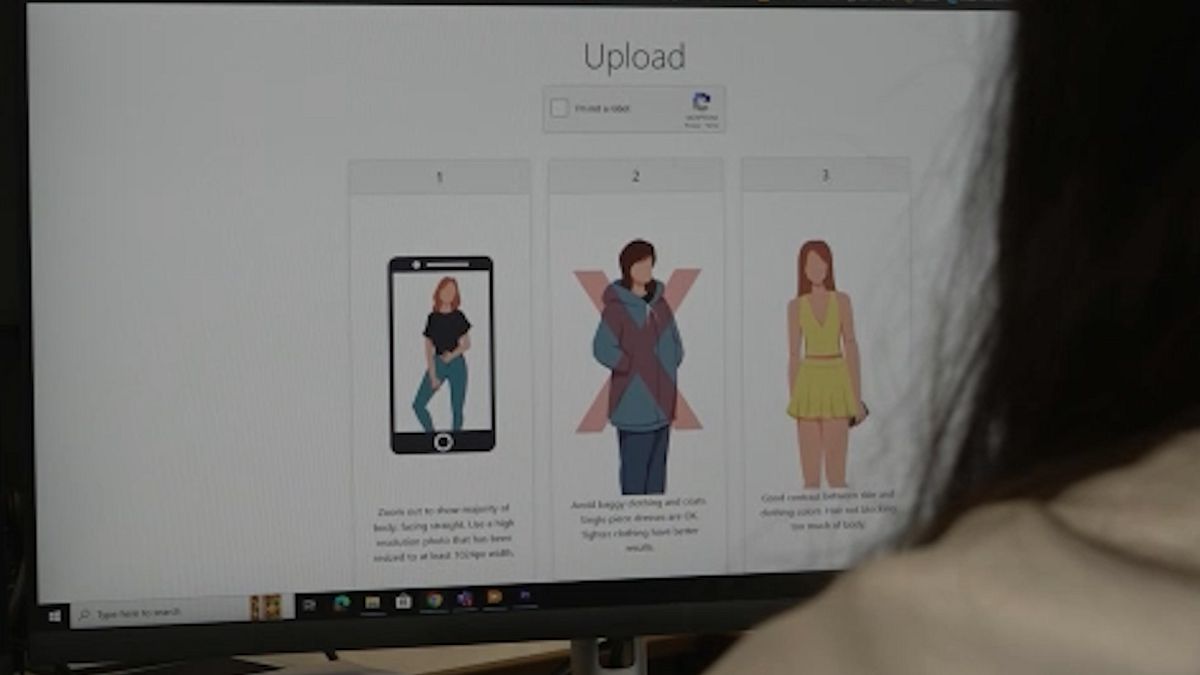

Websites that allow sexual deepfakes to be created are just a click away on any search engine and are free of charge.

Creating a sexual deepfake takes less than 25 minutes and costs nothing, using only a photograph in which the face is clearly visible, according to the 2023 State of Deepfakes study.

In the sample of more than 95,000 deepfake videos analysed between 2019 and 2023, the study finds that there has been a 550% increase.

According to Henry Ajder, an AI and deepfakes expert, those who use these stripping tools seek to “defame, humiliate, traumatise and, in some cases, sexual gratification”.

The creators of nude deepfakes look for their victims’ photos “anywhere and everywhere”.

“It could be from your Instagram account, your Facebook account, your WhatsApp profile picture,” says Amanda Manyame, Digital Law and Rights Advisor at Equality Now.

Prevention

When women come across nude deepfakes of themselves, questions about prevention arise.

However, the answer is not prevention but swift action to remove them, according to a cybersecurity expert.

“I’m seeing that trend, but it’s like a natural trend any time something digital happens, where people say don’t put images of you online, but if you want to push the idea further is like, don’t go out on the street because you can have an accident,” explains Rayna Stamboliyska.

“Unfortunately, cybersecurity can’t help you much here because it’s all a question of dismantling the dissemination network and removing that content altogether,” the cybersecurity expert adds.

Currently, victims of nude deepfakes rely on a range of laws to protect themselves, such as the European Union’s privacy law, the General Data Protection Regulation, and national defamation laws.

When faced with this type of offence, victims are advised to take a screenshot or video recording of the content and use it as evidence to report it to the social media platform itself and to the police.

The Digital Law and Rights Advisor at Equality Now adds: “There is also a platform called StopNCII, or Stop Non-Consensual Abuse of Private Images, where you can report an image of yourself and then the website creates what is called a ‘hash’ of the content. And then, AI is then used to automatically have the content taken down across multiple platforms.”

Global development

With this proposed new directive to combat violence against women, all 27 member states will have the same set of laws to criminalise the most diverse forms of cyber-violence, such as sexually explicit “deepfakes”.

However, reporting this type of offence can be a complicated process.

“The problem is that you might have a victim who is in Brussels. You’ve got the perpetrator who is in California, in the US, and you’ve got the server, which is holding the content in maybe, let’s say, Ireland. So, it becomes a global problem because you are dealing with different countries,” explains Amanda Manyame.

Faced with this situation, the S&D MEP and co-author of the new directive explains that “what needs to be done in parallel with the directive” is to increase cooperation with other countries, “because that’s the only way we can also combat crime that does not see any boundaries.”

Evin Incir also admits: “Unfortunately, AI technology is developing very fast, which means that also our legislation needs to keep up. So we would need to revise the directive in this soon. It is an important step for the current state, but we will need to keep up with the development of AI.”